Comparing Estimators

So far in this module, we have mostly used only one or two classifiers and regressors to keep the lessons easy to follow. In practice, one of the first problems you face is selecting the estimator (that is, the algorithm).

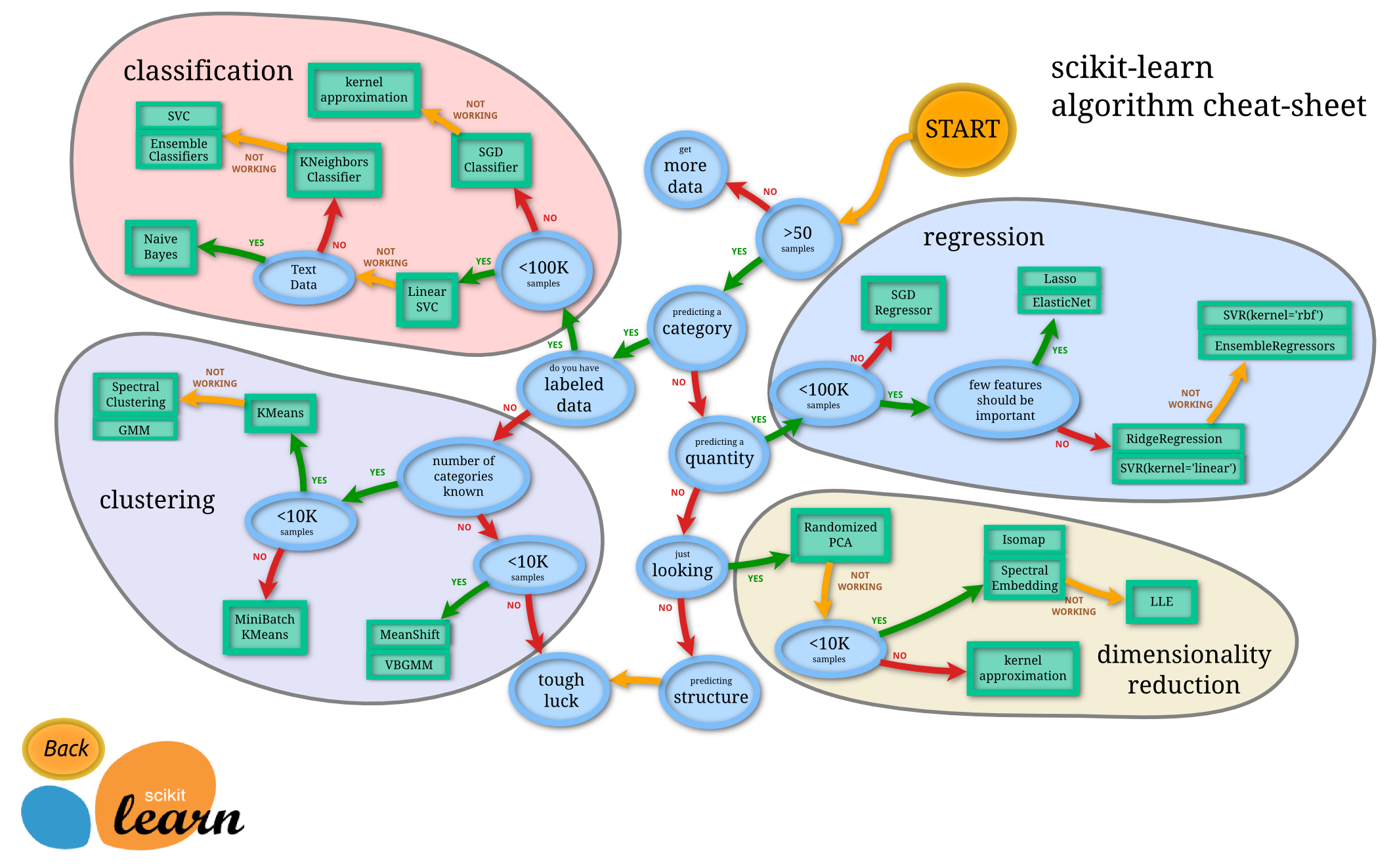

The map above can be used as a starting point. A version with links to the documentation is available on the scikit-learn website.

There are even AutoML solutions that aim to automate various tasks in the process. Using them is beyond the scope of this book, but spend at least 30 minutes investigating the topic after this lesson. Some keywords to get you started: auto-sklearn, H2O, Google AutoML, Azure AutoML. Try to also find some debate about the topic. Does someone think that AutoML will replace data scientists? Why would or wouldn't it?

Supervised Learning Workflow

- Data acquisition
    - Gather the data.
    - Depending on the task, the data may be structured (e.g. tabular data in CSV files) or unstructured (freeform text, digital images).

- Data analysis
    - Visualize and analyze the data.

- Clean the data
    - Select features to keep.
    - Create new features.
    - Decide how to handle missing data.
    - Standardize.
    - Maybe create a transformation Pipeline?

- Train the estimator
    - Choose a selection of estimators that might perform well.
    - Train them and compare the results.
    - Select the best-performing model.

- Hyperparameter optimization
    - Use grid search or similar methods.
    - Aim for a good balance between bias and variance.

- Final model validation and deployment

Note that the process is not as linear as it might seem. You will most likely end up refining earlier steps based on what you learn in later ones.
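The workflow above can be sketched in a few lines of scikit-learn. This is a minimal illustration rather than a prescribed recipe: the Wine dataset, the median imputation, the logistic regression estimator and the grid of `C` values are all assumptions chosen for the example, and only a single estimator is tuned to keep it short.

```python
from sklearn.datasets import load_wine
from sklearn.impute import SimpleImputer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Data acquisition: a small tabular dataset bundled with scikit-learn
# (an assumption for this sketch; your own data would go here).
X, y = load_wine(return_X_y=True)

# Hold out a test set that is only used for the final validation.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=42
)

# Cleaning and training chained into one transformation Pipeline.
pipeline = Pipeline([
    # The Wine data has no missing values, so this step leaves it unchanged;
    # it is included only to show where missing-data handling would go.
    ("impute", SimpleImputer(strategy="median")),
    ("scale", StandardScaler()),
    ("clf", LogisticRegression(max_iter=1000)),
])

# Hyperparameter optimization: grid search with 5-fold cross-validation
# over the regularization strength C of the classifier step.
param_grid = {"clf__C": [0.01, 0.1, 1.0, 10.0]}
search = GridSearchCV(pipeline, param_grid, cv=5)
search.fit(X_train, y_train)

print("Best parameters:", search.best_params_)
print("Cross-validated accuracy:", round(search.best_score_, 3))

# Final validation on data the search has never seen.
test_accuracy = search.score(X_test, y_test)
print("Test accuracy:", round(test_accuracy, 3))
```

Because the scaler sits inside the Pipeline, it is refitted on the training folds of each cross-validation split, so no information from the validation folds leaks into the preprocessing.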

Extension Task

This task is optional, but you should at least take a glance at the book even if you find the content too academic for your taste. Open up the saved PDF book, A Brief Introduction to Machine Learning for Engineers (v3, 2018), which is available here. You have already started chapter 2; read it through. You will notice that we have been focusing on the frequentist approach. There are also supervised learning algorithms based on Bayes' theorem. Scikit-learn implements these in the naive_bayes module. Feel free to read chapters 3 and 4 too.
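If you want to try the naive_bayes module, the following is a minimal sketch. It assumes the Gaussian variant (`GaussianNB`) and the Iris dataset purely for illustration; the module contains other variants that suit other kinds of features.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import cross_val_score
from sklearn.naive_bayes import GaussianNB

X, y = load_iris(return_X_y=True)

# Gaussian naive Bayes models each feature as normally distributed
# within each class and combines them using Bayes' theorem.
model = GaussianNB()

# 5-fold cross-validated accuracy.
scores = cross_val_score(model, X, y, cv=5)
print("Accuracy per fold:", scores.round(3))
print("Mean accuracy:", round(scores.mean(), 3))

# After fitting, the model can report class probabilities for a sample.
model.fit(X, y)
probabilities = model.predict_proba(X[:1])
print("Class probabilities for the first sample:", probabilities.round(3))
```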

Conclusions

In the Jupyter Notebook exercise, you will get to train a few classifiers using the Iris dataset. When using Pipelines and for loops, you don't actually need that many lines of code! Of course, keep in mind that the Iris dataset is fairly clean to start with, so it doesn't require much preprocessing.
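As a rough idea of what such a comparison can look like, here is a hedged sketch. The four classifiers, their settings and the 5-fold cross-validation are assumptions made for this example; the notebook exercise may use a different selection.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)

# Candidate estimators to compare (an illustrative selection).
classifiers = {
    "Logistic regression": LogisticRegression(max_iter=1000),
    "k-nearest neighbors": KNeighborsClassifier(),
    "Support vector machine": SVC(),
    "Decision tree": DecisionTreeClassifier(random_state=42),
}

results = {}
for name, clf in classifiers.items():
    # Each candidate gets the same preprocessing via a Pipeline.
    pipe = make_pipeline(StandardScaler(), clf)
    scores = cross_val_score(pipe, X, y, cv=5)
    results[name] = scores.mean()

# Print the candidates from best to worst mean accuracy.
for name, mean_score in sorted(results.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: {mean_score:.3f}")
```

On a dataset this small and clean, the differences between estimators tend to be minor, so treat the ranking as a starting point rather than a verdict.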