Pipelines

By now, you have learned many data preprocessing and machine learning techniques, but we haven't yet discussed the actual workflow: how do you keep your code easy to read and maintain? Two scikit-learn modules help with this: Pipeline (sklearn.pipeline) and Compose (sklearn.compose).

Some of the useful classes in each are:

- Compose module:

  - ColumnTransformer, which applies transformers to columns of an array or pandas DataFrame.

- Pipeline module:

  - Pipeline class, which applies a list of transforms and a final estimator.

  - FeatureUnion class, which concatenates results of multiple transformer objects.
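As a minimal sketch of the Pipeline class, the example below chains a StandardScaler with a final LogisticRegression estimator. The toy data and the step names "scale" and "model" are made up for illustration only.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Toy data (made up for illustration): two numeric features, binary target.
X = np.array([[1.0, 200.0], [2.0, 180.0], [3.0, 220.0],
              [8.0, 900.0], [9.0, 950.0], [10.0, 870.0]])
y = np.array([0, 0, 0, 1, 1, 1])

# Each step is a (name, estimator) pair; every step except the last
# must be a transformer.
pipe = Pipeline(steps=[
    ("scale", StandardScaler()),
    ("model", LogisticRegression()),
])

# fit() fits the scaler, transforms X, then fits the model on the scaled data.
pipe.fit(X, y)

# predict() applies the already fitted scaler before calling the model.
predictions = pipe.predict(X)
print(predictions.shape)
```

The whole chain behaves like a single estimator, so it can be passed to tools such as cross-validation without repeating the preprocessing by hand.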

In the future, the contents of the Pipeline module will likely be refurbished and moved into the Compose module.

Note: FeatureUnion is very similar to ColumnTransformer, except that it doesn't perform any column selection by default, so every transformer receives the full input. Check the scikit-learn documentation for its basic usage and compare it to ColumnTransformer during this lesson.
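The difference can be sketched on a small made-up array. In this illustrative example (the data and the transformer names "scaled" and "poly" are assumptions), FeatureUnion hands both columns to both transformers, while ColumnTransformer hands each transformer only the column it was assigned.

```python
import numpy as np
from sklearn.compose import ColumnTransformer
from sklearn.pipeline import FeatureUnion
from sklearn.preprocessing import PolynomialFeatures, StandardScaler

# Made-up data: 4 rows, 2 numeric columns.
X = np.array([[1.0, 10.0], [2.0, 20.0], [3.0, 30.0], [4.0, 40.0]])

# FeatureUnion: both transformers see both columns.
union = FeatureUnion([
    ("scaled", StandardScaler()),                                # 2 columns out
    ("poly", PolynomialFeatures(degree=2, include_bias=False)),  # 5 columns out
])
union_out = union.fit_transform(X)
print(union_out.shape)  # (4, 7)

# ColumnTransformer: each transformer sees only its selected column.
columns = ColumnTransformer([
    ("scaled", StandardScaler(), [0]),                                # 1 column out
    ("poly", PolynomialFeatures(degree=2, include_bias=False), [1]),  # 2 columns out
])
columns_out = columns.fit_transform(X)
print(columns_out.shape)  # (4, 3)
```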

What is a transformer?

ColumnTransformer, FeatureUnion and Pipeline all use transformers. As the Pipeline documentation states, a transformer must "…implement fit and transform methods."

We have already used some transformers, such as:

- OrdinalEncoder

- OneHotEncoder

- PolynomialFeatures

- StandardScaler
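As a minimal sketch of what fit and transform do, the example below uses StandardScaler from the list above on made-up numbers: fit learns statistics from the training data, and transform applies them to any data, including rows the scaler has not seen before.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Made-up data for illustration.
X_train = np.array([[1.0], [2.0], [3.0]])
X_new = np.array([[2.0], [4.0]])

scaler = StandardScaler()

# fit() learns the column mean and standard deviation from X_train only.
scaler.fit(X_train)
print(scaler.mean_)  # [2.]

# transform() reuses the learned statistics; nothing is re-learned from X_new.
X_new_scaled = scaler.transform(X_new)

# fit_transform() is a shorthand for fit() followed by transform()
# on the same data.
X_train_scaled = StandardScaler().fit_transform(X_train)
```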

If we need to perform custom data preprocessing, such as turning names in the Titanic dataset into titles, we can create our own transformers. Below is a basic example:

    from sklearn.base import BaseEstimator, TransformerMixin


    class YourOwnTitanicParser(BaseEstimator, TransformerMixin):
        def __init__(self):
            pass

        def fit(self, X, y=None):
            # Nothing to learn from the data, so fit only returns self.
            return self

        def _split_title(self, cell):
            # "Braund, Mr. Owen Harris" -> " Mr. Owen Harris" -> "Mr."
            cell = cell.split(",")[1]
            cell = cell.split()[0]
            return cell

        def transform(self, X, y=None):
            # Work on a copy so the caller's DataFrame is left unchanged.
            X = X.copy()
            X["title"] = X["name"].apply(self._split_title)
            X = X.drop(columns="name")
            return X.values

Normally, we will use this class in a Pipeline instead of calling it manually, but we can test it anyway:

    # titanic is assumed to be a DataFrame with a "name" column.
    nameparser = YourOwnTitanicParser()
    titanic_parsed = nameparser.transform(titanic)

The transform method returns a NumPy array holding the values of the DataFrame. The title column has been added and the name column has been removed.

Now, a good question is whether this approach is necessary. What do we gain compared to the old method of calling one function after another? It depends. In some cases, you might want to use a transformer parameter as a hyperparameter when you are performing a grid search. Code readability might increase when you use transformer classes. Also, some custom transformers might be useful in multiple projects, if the data in those projects has a similar format (e.g. email addresses, post codes, lat-long coordinates or anything else that you need to parse).
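To illustrate the hyperparameter point, here is a minimal sketch of a custom transformer with a constructor parameter. The class name TitleExtractor, the parameter drop_name, the step name "parser" and the two-row DataFrame are all hypothetical. The sketch assumes the usual scikit-learn convention that each __init__ argument is stored unchanged in an attribute of the same name, which is what lets BaseEstimator expose it through get_params and set_params.

```python
import pandas as pd
from sklearn.base import BaseEstimator, TransformerMixin
from sklearn.pipeline import Pipeline


class TitleExtractor(BaseEstimator, TransformerMixin):
    """Hypothetical transformer: adds a "title" column parsed from "name"."""

    def __init__(self, drop_name=True):
        # Store the argument unchanged under the same name so that
        # get_params() / set_params() can find it.
        self.drop_name = drop_name

    def fit(self, X, y=None):
        return self  # nothing to learn from the data

    def transform(self, X):
        X = X.copy()
        # "Braund, Mr. Owen Harris" -> "Mr."
        X["title"] = X["name"].str.split(",").str[1].str.split().str[0]
        if self.drop_name:
            X = X.drop(columns="name")
        return X


# Made-up two-row sample in the style of the Titanic "name" column.
people = pd.DataFrame({
    "name": ["Braund, Mr. Owen Harris", "Heikkinen, Miss. Laina"],
    "age": [22, 26],
})

pipe = Pipeline([("parser", TitleExtractor())])

# Inside a Pipeline the parameter is addressed as <step name>__<parameter>.
print(pipe.get_params()["parser__drop_name"])  # True

pipe.set_params(parser__drop_name=False)
parsed = pipe.fit_transform(people)
print(list(parsed.columns))  # ['name', 'age', 'title']
```

A grid search changes parameters through the same set_params mechanism, so a key such as "parser__drop_name" could appear in its parameter grid.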

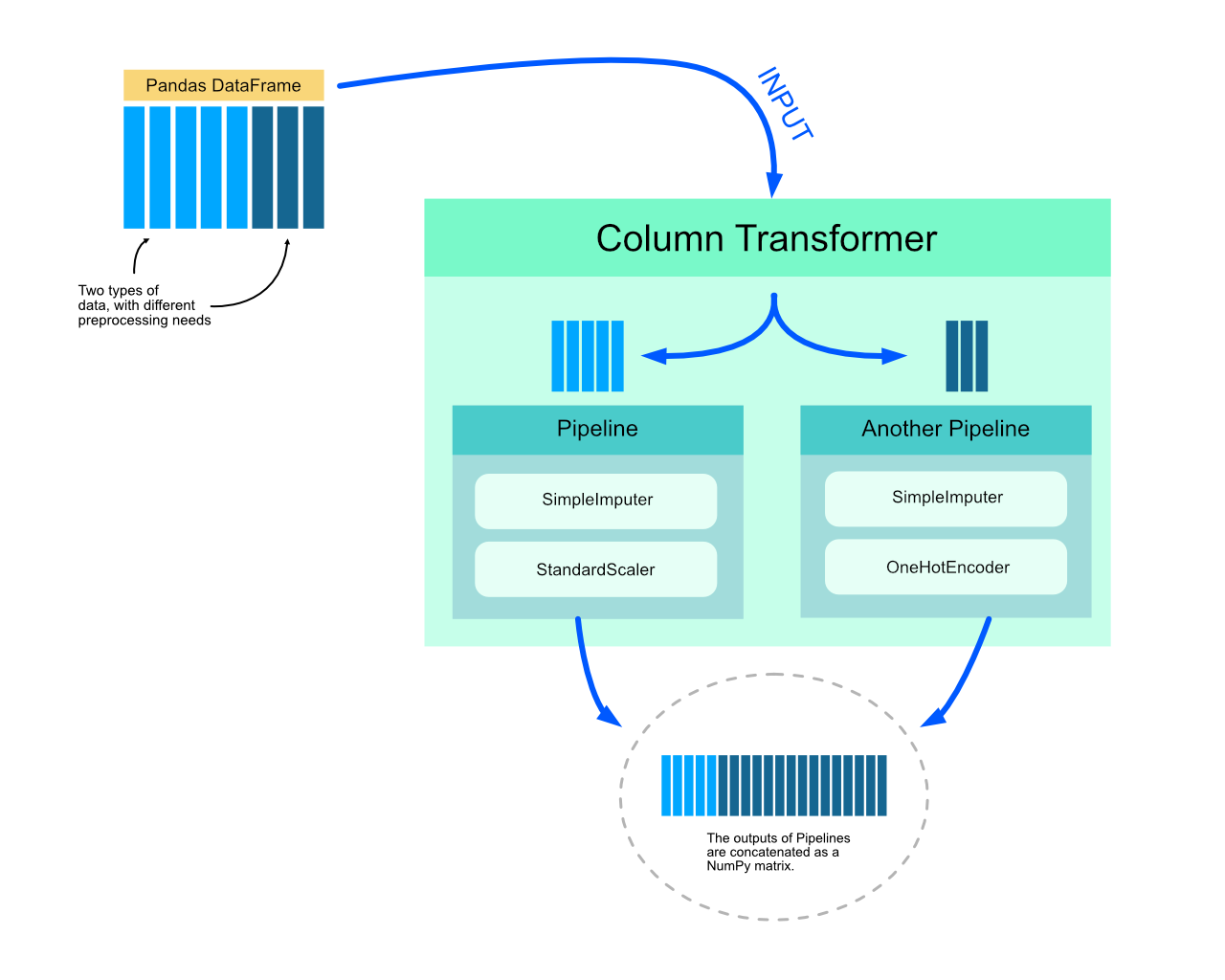

Column Transformer

Often, your dataset will consist of mixed feature and data types that require separate operations. For example, you might want to add polynomial terms for some features, but not for all. Also, you might have a mixture of categorical features (e.g. strings like ["cat", "dog"]) and numerical features (e.g. height or weight).

As illustrated above, the ColumnTransformer runs multiple Pipelines with different transformers, each on its own subset of columns. The outputs of each are concatenated together. Note that the dimensionality of the dataset can (and often will) change. In this example, the OneHotEncoder would add dummy variables, thus increasing the number of features.
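A minimal sketch of this idea follows. The DataFrame, its column names and the transformer names "num" and "cat" are made up for illustration. Numeric columns go through a small Pipeline, the categorical column goes through OneHotEncoder, and the results are concatenated.

```python
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, PolynomialFeatures, StandardScaler

# Made-up data: one categorical and two numerical features.
pets = pd.DataFrame({
    "species": ["cat", "dog", "dog", "cat"],
    "height": [25.0, 60.0, 55.0, 23.0],
    "weight": [4.0, 30.0, 25.0, 3.5],
})

# Numeric branch: polynomial terms first, then scaling.
numeric = Pipeline([
    ("poly", PolynomialFeatures(degree=2, include_bias=False)),
    ("scale", StandardScaler()),
])

# Each entry is (name, transformer, columns it should receive).
preprocess = ColumnTransformer([
    ("num", numeric, ["height", "weight"]),
    ("cat", OneHotEncoder(), ["species"]),
])

features = preprocess.fit_transform(pets)
print(pets.shape)      # (4, 3)
print(features.shape)  # (4, 7): 5 polynomial terms + 2 dummy variables
```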

If the transformers you are using have input parameters, you can add those to the parameter grid of GridSearchCV and tune them together with the model. You will learn more about this in the Notebook and in the scikit-learn documentation.
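The sketch below shows one way this can look. The synthetic data, the step names and the choice of Ridge as the final estimator are assumptions made for illustration. Parameters of nested steps are addressed by joining the step names with double underscores.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.linear_model import Ridge
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, PolynomialFeatures

# Synthetic data, made up for illustration.
rng = np.random.default_rng(0)
n = 40
data = pd.DataFrame({
    "species": rng.choice(["cat", "dog"], size=n),
    "height": rng.uniform(20, 60, size=n),
})
target = 0.01 * data["height"] ** 2 + rng.normal(0, 1, size=n)

preprocess = ColumnTransformer([
    ("num", PolynomialFeatures(include_bias=False), ["height"]),
    ("cat", OneHotEncoder(handle_unknown="ignore"), ["species"]),
])
model = Pipeline([("preprocess", preprocess), ("regressor", Ridge())])

# <pipeline step>__<transformer name>__<parameter>
param_grid = {
    "preprocess__num__degree": [1, 2],
    "regressor__alpha": [0.1, 1.0, 10.0],
}

search = GridSearchCV(model, param_grid, cv=3)
search.fit(data, target)
print(search.best_params_)
```

Because the preprocessing lives inside the estimator being searched, it is re-fitted on the training part of every cross-validation split.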

Conclusions

Pipelines can either add or remove complexity, depending on what kinds of preprocessing steps are required.

- If your dataset requires fairly everyday preprocessing steps (OneHotEncoding, StandardScaling), Pipelines will most likely increase the readability of your code.

- On the other hand, if the dataset requires a lot of complex parsing and checking, you have at least two options:

  - Perform complex data preparation first. Then, as a final step, add a Pipeline for the most standard steps (e.g. StandardScaling).

  - Write a custom transformer class for each required task. Consider giving each transformer its own pipeline. The benefit of this approach is that you can include transformer parameters in a grid search as hyperparameters.

Advanced use of Pipelines is beyond the scope of this course, but understanding the basics is a necessity. Otherwise, you might find it impossible to follow some parts of the scikit-learn documentation or other online sources.